Patrick Emami, a doctoral student in the UF Department of Computer & Information Science & Engineering (CISE), is part of a research team that worked on a project aimed at using sensors and machine learning to optimize the decisions made by intelligent traffic intersection controllers (ITICs).

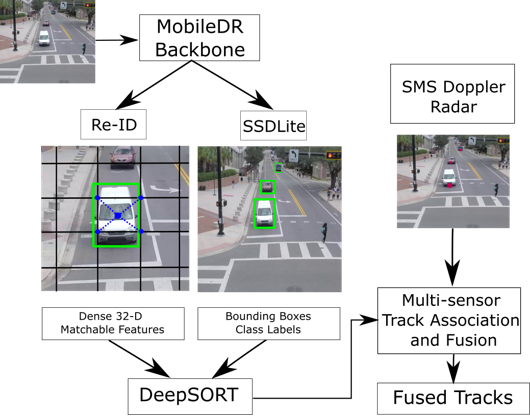

Specifically, Emami worked on creating a framework that used multiple off-the-shelf sensors and state-of-the-art deep learning for edge computing to capture information about all road users around a traffic intersection. This involved specifying a novel deep learning architecture and training algorithm as well as a multi-sensor fusion strategy.

The project, “Simple & Online Tracking of Objects Near & Far at Traffic Intersections with Video & Radar,” was funded by the National Science Foundation (NSF) and led by Dr. Sanjay Ranka, a professor in CISE. The research is part of a series of projects associated with I-STREET – the UFTI’s smart test bed which evaluates and deploys advanced technologies including connected and autonomous vehicles, smart devices, and sensors to enhance mobility and safety.

Inadequately managed intersections lead to traffic congestion and accidents. One solution proposed in the literature is to adapt the decisions made by traffic signal controllers to the current traffic around the intersection. For example, ITICs try to jointly optimize signal timings and vehicle trajectories in mixed traffic environments (conventional, connected, and autonomous vehicles) in real time. ITICs need an accurate, efficient, and cost-effective way to get information about all road users both near and far from the intersection. However, existing sensing frameworks fail to achieve a good trade-off among these three qualities.

Findings from the study suggest that video-based tracking with deep learning algorithms is not enough for the 3-D tracking of vehicles far from intersections. This is mostly because these algorithms sacrifice accuracy to run fast enough for real-time applications. However, when the video tracking is combined with radar, tracking performance improves.

“We found that the video tracker augmented by a radar was able to achieve the best tracking performance overall both near and far from the intersection,” Emami said. “Specifically, the fused solution strongly improves the video tracker performance at distances between 65 meters and 200 meters away, and it strongly improves the radar at distances closer than 65 meters away.”
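The range dependence Emami describes can be illustrated with a small sketch. This is not the team's fusion algorithm, which the article does not detail. It is a deliberately simplified, hypothetical rule that trusts the video estimate closer than 65 meters and the radar estimate beyond that, out to 200 meters. The function name `fuse_range` and the hard switch at the crossover distance are assumptions made for illustration only.

```python
from typing import Optional

# Distances quoted in the article; treating them as a hard switch is an assumption.
CROSSOVER_M = 65.0   # video is taken as more reliable closer than this
MAX_RANGE_M = 200.0  # farthest distance mentioned for the fused tracker


def fuse_range(video_m: Optional[float], radar_m: Optional[float]) -> Optional[float]:
    """Return one distance estimate (meters) for a tracked road user.

    Hypothetical rule: with both sensors reporting, prefer video near the
    intersection and radar far from it. With one sensor, use what is available.
    """
    if video_m is None and radar_m is None:
        return None
    if video_m is None:
        return radar_m
    if radar_m is None:
        return video_m

    # Use the average of both readings as a rough guess of where the object is,
    # then pick the sensor assumed to be more reliable at that distance.
    rough_m = (video_m + radar_m) / 2.0
    chosen = video_m if rough_m < CROSSOVER_M else radar_m
    # Clamp to the range discussed in the article.
    return min(chosen, MAX_RANGE_M)


if __name__ == "__main__":
    print(fuse_range(30.0, 34.0))    # near the intersection: video estimate
    print(fuse_range(118.0, 122.0))  # far from the intersection: radar estimate
    print(fuse_range(None, 150.0))   # only radar sees the object
```

A real system would more likely blend the two estimates according to each sensor's uncertainty rather than switch abruptly, but the sketch shows why the two sensors complement each other across the distances quoted above.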

Overall, this project has generated algorithms that will be published in an open-access journal, and the software produced will become part of an ITIC system licensed through the University of Florida’s patent office.

This project holds promise for society and practitioners because it has the potential to reduce travel times, which translates into cost savings for the general public.

“This research has positive implications for urban road users since we have shown in simulation that ITICs with a detection range greater than 150 meters are able to reduce travel times by 38-52% in mixed traffic environments,” Emami said. “The proposed 3D tracking system directly helps make ITICs more practical for deployment.”